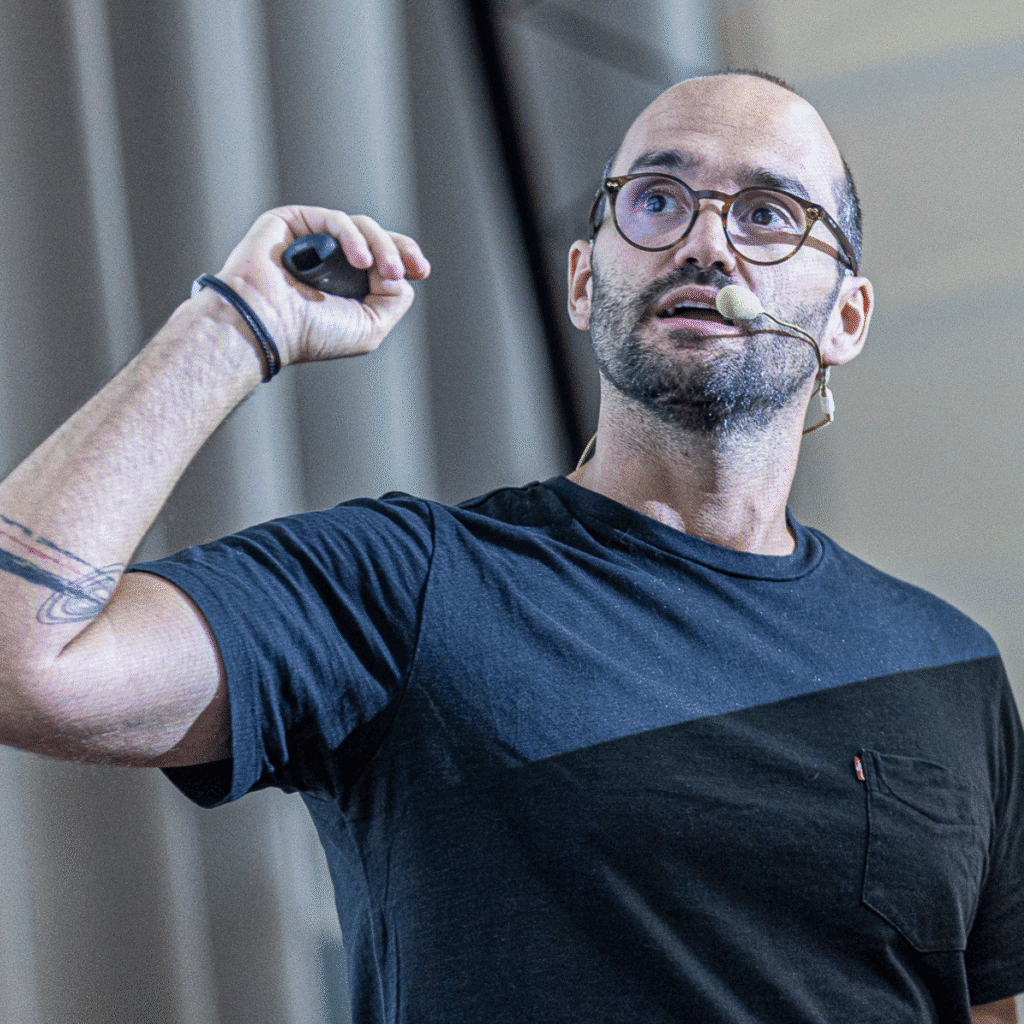

Rui Romano, keynote speaker at Data Point Prague 2025, shares the inside story on TMDL, PBIP, and why Prague was the right fit for his first-ever keynote.

FROM COMMUNITY MVP TO MICROSOFT PRODUCT TEAM

Rui, thank you for taking the time to talk with us – we really appreciate it. Your work has had a big impact on the Power BI community, and we’re thrilled to introduce you to more people here in Prague ahead of your keynote at Data Point 2025. Let’s jump right in.

Could you tell us a bit about your background and what you’re currently doing at Microsoft?

OK guys, firstly, thanks for having me – it means a lot… and let me start with a little bit of history.

I’m a former Power BI developer. I worked in consulting for about 15 years, starting as a developer and eventually managing data teams at a company called DevScope. I was also a Microsoft Data Platform MVP, and I became known for talks about Power BI hacks – tips and tricks that, luckily, aren’t needed so much anymore. These included things like version control with PBIX files, using internal APIs, and optimizing development through manual workarounds or community tools like pbi-tools or Tabular Editor. I felt all the same pain points as any Power BI dev.

“I always had a dream of joining Microsoft – more specifically, the Power BI team.”

So this was a long-standing journey, not just something you jumped into recently.

Exactly. I always had a dream of joining Microsoft – more specifically, the Power BI team. I’ve been working with Microsoft BI technologies since SQL Server 2000. I saw it all: Power Pivot, Analysis Services multidimensional, then tabular… and when Power BI came along, it completely changed the game.

What really excited me was the ability to spin up an Analysis Services model in seconds. I come from the days when just setting up the environment took one or two weeks. Clusters, hardware, configuration – the works.

So I fell in love with Power BI right from the start. Eventually, I got the opportunity to join Microsoft – first as part of the Power BI CAT team.

For those who might not know – what exactly does the CAT team do?

It’s technically part of the product team, but it’s more like the voice of enterprise customers within the team. It’s still a kind of consulting role – you talk to customers, understand their pain points, and bring that feedback directly to the product group.

I was lucky to join under Chris Webb – an amazing manager – and I got to work with legends like Phil Seamark, Adam Saxton, Patrick LeBlanc, Alex Powers, Matthew Roche, and many others. Honestly, it’s a dream team of Microsoft data professionals.

And especially Chris Webb – I think I told him this, but he was the first person I ever followed in the data space. His blog helped me a lot early in my career.

FROM CAT TEAM TO PRODUCT MANAGER

You mentioned working with some real legends on the CAT team. What made you shift from that role into a more feature-focused position as a PM?

I really enjoyed my time on the CAT team, but when the opportunity arose to become a Product Manager working on features I’m truly passionate about – like Developer Mode, TMDL, PBIR, and Git Integration – I couldn’t pass it up. I’m now a PM on the Power BI team in Fabric, focusing on features to make the enterprise-grade pro developer successful with Power BI in Fabric.

What’s the goal behind those features – or your mission, let’s say – as a PM?

I started on the Enterprise BI team with Christian Wade, and the goal was clear: make Power BI work for professional developers.

We’re talking about people who work in teams, who need CI/CD, version control, repeatable processes – people like I used to be. We want Power BI to support that developer persona in a real, first-class way.

“We want Power BI to support the developer persona in a real, first-class way.”

You’re talking about making Power BI “enterprise dev ready” without losing its self-service DNA, right?

Exactly. Power BI is – and always will be – a self-service tool. That’s its strength.

But that doesn’t mean devs should have to use external tools, hacks, or workarounds just to get things like source control or automated deployment.

And while we’ll never turn Power BI Desktop into Visual Studio – we came from that world – we aim to deliver a streamlined experience for users who prefer coding, while deepening integration with best-in-class development tools like Visual Studio Code.

We just need to do it without making the product intimidating or unusable for regular business users.

TMDL: BORN FROM THE COMMUNITY

You mentioned one feature earlier that we need to spend more time on: TMDL. It’s clearly a game-changer for Power BI developers. Before TMDL, we had to rely on all kinds of hacks or external tools to version PBIX files.

Now with TMDL and the new project file format, everything’s cleaner, more controllable. Can you tell us how it started – and what makes it so powerful?

Yeah, I’d love to talk about TMDL. One of my colleagues even joked on Twitter a while ago: “Find a partner that loves you the way @RuiRomano talks about TMDL.” And yeah – it’s true. It was love at first sight.

“TMDL came from the community – and that’s what I’m most proud of.”

But I don’t want to take all the credit. One of the things I’m most proud of is that TMDL came from the community.

It was started by Mathias Thierbach, who worked super closely with the Product team as part of the Community Program. He was one of the main architects early on.

When I joined the team, TMDL was already in motion. My job was to help guide it safely to where it needed to go. It’s been a team effort – Microsoft and the community – and I’m really proud of that, especially coming from my own background as an MVP.

That’s the kind of origin story people love to hear – a big Microsoft feature that actually grew out of real-world dev pain. But what was the core purpose of TMDL when you first started shaping it?

At its core, we needed a clean, readable, source control–friendly format for semantic models. JSON – the old format – just didn’t cut it. It was hard to read, hard to merge, and impossible to work with when it came to DAX and Power Query code.

You couldn’t just open it up, copy and paste a measure, or compare two versions easily. So one of the big goals was to fix that: better readability, better merge conflict resolution, and just a better dev experience overall.
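As a rough illustration of the readability and comparison point, here is a minimal Python sketch that diffs two versions of a measure written in a TMDL-style text form. The snippets are hand-written for this example, and the table, measure, and column names are hypothetical. The diff itself uses only Python's standard `difflib` module and is not part of any Power BI tooling.

```python
import difflib

# Two hand-written versions of a TMDL-style measure definition.
# Table, measure, and column names are hypothetical examples.
OLD = """\
table Sales

    measure 'Total Sales' = SUM(Sales[Amount])
        formatString: #,##0
"""

NEW = """\
table Sales

    measure 'Total Sales' = SUMX(Sales, Sales[Quantity] * Sales[Price])
        formatString: #,##0
"""


def diff_lines(old: str, new: str) -> list[str]:
    """Return only the added and removed lines of a unified diff."""
    diff = difflib.unified_diff(
        old.splitlines(), new.splitlines(),
        fromfile="before", tofile="after", lineterm="",
    )
    # Keep '+' and '-' lines, but skip the '+++' / '---' file headers.
    return [
        line for line in diff
        if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
    ]


changed = diff_lines(OLD, NEW)
for line in changed:
    print(line)
```

Because each property sits on its own line, the change shows up as one removed line and one added line, which is the kind of diff that is easy to review and merge.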

But TMDL is more than “just a better file format,” right?

Absolutely. TMDL isn’t just a format – it’s a language.

And that’s where it gets really exciting. Because it’s a language, we can build a whole code experience around it. In fact, we already have.

You can use Visual Studio Code with the TMDL extension we built – it gives you things like semantic highlighting, IntelliSense, tooltips, error diagnostics, fixing duplicate lineage tags… everything you’d expect from a real programming environment.

“It’s semantic modeling, as code.”

And that fits beautifully into modern dev workflows. How close are we to being able to create entire models just with TMDL?

We’re getting there. Right now, it’s ideal for certain tasks – like adding a new table, creating a set of measures – where opening Power BI Desktop isn’t worth the time.

In the near future, I can see people using auto-snippets or templates to create entire semantic models in TMDL. The point is: it’s about choice.

If you’re comfortable in code, now you’ve got the tools to stay there.

OK, let’s bring in a hot topic – AI. With TMDL being code, what kind of opportunities does that open up?

That’s a huge part of why TMDL is such a disruptive shift.

We’re living in the age of GenAI, and one thing AI is extremely good at is working with code. If your semantic model is represented in code, then AI can help you write it, refactor it, repeat tasks, and more.

And because TMDL is readable, you can see what the AI is doing and instantly know whether it’s right or wrong and continue iterating.

So it’s not just about better source control – it’s about making you more productive as a dev in this new AI-driven landscape.

PBIP, PBIR & DEVELOPER MODE

One thing we haven’t touched on yet – but it’s essential – is the relationship between TMDL and the PBIP format. Because to fully leverage TMDL, you need to move away from PBIX and start using PBIP, right?

Yeah, let me unpack that a bit.

First, we actually shipped TMDL before PBIP – around April 2023. That initial version was for Analysis Services users working with tools like Tabular Editor. We added it as a serialization option in the TOM API.

PBIP came later, in June 2023, but even then, the model part was still saved using the older JSON-based BIM format.

And PBIP wasn’t just a technical shift – it changed the way Power BI projects were structured.

Exactly. Since then, PBIP has added two new things:

- The TMDL format for semantic models

- And the PBIR format for reports.

Before PBIR, reports were stored as a single JSON file called report.json, also referred to as PBIR-Legacy. This format was never officially supported or documented for making modifications. PBIR represents a significant advancement: it’s now fully supported to edit Power BI reports using code, and it’s backed by comprehensive documentation, including updated JSON schemas published monthly.

Now, when you save as PBIP, you get both: the model in TMDL, and the report in PBIR. And yes, this is source control–friendly, but that’s not the only reason this matters.

Right – people often think “PBIP is just for Git,” but it’s bigger than that. What else does it unlock?

That’s exactly it. People see PBIP and developer mode and go: “Oh, this is just for versioning.” But it’s way more than that.

“PBIP isn’t just for Git – it’s for developers who want to build smarter.”

The PBIX file is still the default – and I think it always will be, because it’s easy. If I want to send you a report, I can just drop the PBIX in OneDrive or Teams. Done.

But PBIP is a different thing. It’s for pro devs who want to better collaborate with each other, to work with code, be in control, inspect what’s going on, reuse components, and build reusable templates.

So PBIP opens up new workflows, not just cleaner commits.

Exactly. You can inspect a measure, a Power Query step – without opening Power BI Desktop.

That’s a huge productivity win. Especially with large files where Desktop takes a while to load. You can copy/paste code, pull in calendar tables, theme files, even template pages.

We see PBIP as a foundation for reusable, modular BI development – not just version control.
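To give a feel for inspecting a model without opening Power BI Desktop, here is a minimal Python sketch that scans a project folder for `.tmdl` files and lists the measure names it finds. It is a line-based heuristic, not a real TMDL parser, and it assumes measures are declared on lines of the form `measure Name = ...` or `measure 'Name With Spaces' = ...`. The function name `list_measures` is made up for this example.

```python
import re
from pathlib import Path

# Simplified pattern (an assumption of this sketch) that matches lines like:
#   measure 'Total Sales' = ...
#   measure Margin = ...
MEASURE_RE = re.compile(
    r"^\s*measure\s+(?:'([^']+)'|([^\s=]+))\s*=", re.MULTILINE
)


def list_measures(project_root: Path) -> dict[str, list[str]]:
    """Map each .tmdl file (path relative to the root) to its measure names."""
    found: dict[str, list[str]] = {}
    for tmdl_file in sorted(project_root.rglob("*.tmdl")):
        text = tmdl_file.read_text(encoding="utf-8")
        # Each match is a (quoted_name, bare_name) pair; only one is non-empty.
        names = [quoted or bare for quoted, bare in MEASURE_RE.findall(text)]
        if names:
            found[tmdl_file.relative_to(project_root).as_posix()] = names
    return found
```

Pointing `list_measures` at the folder where a PBIP project was saved returns a dictionary of files and measure names that can be printed or searched, with no need to load the model first.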

That sounds great in theory – but Power BI has had a reputation in the past for breaking if you touched files outside Desktop. What changed?

You’re absolutely right – and that’s where the hardening work comes in.

PBIP isn’t just “dumping files into a folder.” It’s a supported, code-based development model. And that means we’ve had to make Power BI Desktop OK with changes made by external tools or by hand.

That’s been a major investment: we’ve published schemas, built validation into Desktop, and now we’re working on opening even more scenarios.

For example, right now, if a semantic model has multiple partitions, Desktop can’t open it – that’s changing. With TMDL View, we’re making it possible to open and edit these models even if they include advanced features Desktop doesn’t have UI for.

And I guess this also improves how external tools interact with Power BI?

Definitely. Today, if you use Tabular Editor to create a new table or edit Power Query code, things might break. But once we finish this hardening work, that goes away.

Power BI Desktop and external tools will coexist better, giving developers real flexibility and power.

CODE. COLLABORATE. ACCELERATE.

Let’s talk about your keynote at Data Point Prague. It’s titled Code, Collaborate, Accelerate – and even just the name feels packed with intention. Can you break it down for us?

Yeah, definitely. I named it Code, Collaborate, Accelerate because those three words capture where we are now in the evolution of Power BI development.

- Code is all about what we’ve just talked about. Power BI Desktop now opens up your entire project in a code-friendly format – TMDL, PBIR, PBIP. You can finally see and understand what’s under the hood.

- Accelerate is what happens once you’ve got that control. Now you can script things. You can automate repetitive tasks. You can apply patterns across many files. You can use AI to help you. That’s a big deal – especially for self-service users who suddenly find themselves maintaining 1,000 reports and need to change a logo across all of them.

- And Collaborate – that’s about bringing this all into a team environment. Using Git. Working with others. Building solid CI/CD pipelines. Power BI development has become collaborative in a way it never was before.

The point of the session is to show developers that there’s a new way to work – and even if you’re not a hardcore coder, knowing these capabilities exist can save you days of work or unlock totally new ways to build.
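The "change a logo across 1,000 reports" scenario can be sketched as a bulk text edit over project files. The Python example below is a minimal, generic sketch: it assumes the value to change (for instance an image file name) appears as plain text inside JSON files under the project folders, which may not match how a given report actually stores its resources. The function name, file pattern, and logo file names are all hypothetical.

```python
from pathlib import Path


def replace_in_files(root: Path, old: str, new: str,
                     pattern: str = "*.json",
                     dry_run: bool = True) -> list[Path]:
    """Replace `old` with `new` in every matching file under `root`.

    Returns the files that contain `old`. With dry_run=True (the default)
    nothing is written, so the list can be reviewed first.
    """
    touched: list[Path] = []
    for path in sorted(root.rglob(pattern)):
        if not path.is_file():
            continue
        text = path.read_text(encoding="utf-8")
        if old not in text:
            continue
        touched.append(path)
        if not dry_run:
            path.write_text(text.replace(old, new), encoding="utf-8")
    return touched
```

A cautious workflow would be to run it once with `dry_run=True`, review the returned file list, run it again with `dry_run=False`, and then inspect the resulting Git diff before committing.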

Love that. So, let me ask – why did you agree to speak at Data Point Prague?

Well, first off, I’m always open to community and conference events – I try to say yes whenever I can.

It’s not always easy – I have three kids, and anytime I go somewhere I need to figure out the logistics back home. But this time, the timing worked out. And honestly, when you guys asked me to do the keynote, that really caught my attention.

I’m not the “big roadmap keynote” kind of person. I’m not going to do a sweeping overview of Microsoft Fabric from end to end. I’m focused – I care about the Pro Dev experience. And you told me that’s exactly what your audience wants – more depth, more advanced topics. That really clicked with me.

This is actually my first keynote ever, and I love the challenge. The conference looked well organized, with good attendance and strong energy. It felt like the right fit.

We’re absolutely thrilled to have you. Final question – and this is just for fun. You’ve said before that when you visit a new country, you like to try the local food. Have you looked up any Czech specialties?

Nope – and that’s on purpose!

I try not to research the food ahead of time. I like to arrive with no expectations, just go out and try what’s traditional. I did that once in Poland – loved all the soups – and then afterward I looked up what was in them and thought, “yeah, maybe I wouldn’t have tried that if I’d known.”

So I trust you guys to point me in the right direction. Just tell me what I should try, and I’ll be all in.

If you don’t have your ticket yet, head to the Data Point Prague website and secure yours today!

The conference kicks off on Thursday, May 29, with 5 hands-on precons, followed by 4 stages of data-packed sessions on Friday, May 30, where Rui Romano opens the day with his keynote at 9:00.